Banners advertising cybersecurity on houses, buses and everywhere else: the city of San Francisco talks security during the week of RSA Conference. The event itself is huge, with many tracks running in parallel. I even got lost once at the expo, which seems to be twice the size of the German it-sa. But I had to refill my stock of pens anyway after the break in in-person events caused by Corona 😉

However, I brought home not only pens and shirts, but also new insights, which I describe in this article.

Threat Modelling made easy

Threat modelling often fails because the methods are too complex. Alyssa Miller, one of the authors of the Threat Modelling Manifesto, pointed out in her presentation that threat modelling can be easy and that everyone is able to do it. We already do it every day, for example when deciding whether or not to wear a mask at the conference. She also showed, with the example in the accompanying picture, how natural language can be used to simplify threat modelling. At this point I was reminded of the threat modelling requirements in the automotive cybersecurity standard ISO/SAE 21434 and wondered whether they might be too detailed (cf. the anti-pattern “Tendency to Overfocus” from the Threat Modelling Manifesto). I will give this more thought later on. There is definitely a tendency to focus (too?) much on details and methods in automotive TARA (Threat Analysis and Risk Assessment).

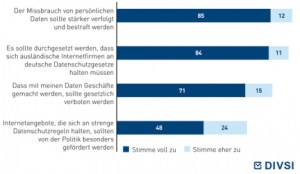

Privacy on the rise, but still a way to go

Privacy is definitely on the rise, also at RSA Conference. There was a keynote with the Chief Privacy Officers (CPOs) of Google and Apple, who pointed out that privacy receives the highest attention in their companies. But imho there is still a way to go, and most tech giants (except Apple) are struggling with practical implementation because their business models do not go well together with privacy. Google’s CPO Keith Enright mentioned consent as the gold standard. I disagreed in my presentation because “Consent on Everything” is often misused to legitimize the processing of personal data. That’s why it became risk #4 of the updated OWASP Top 10 Privacy Risks.

Bruce Schneier’s new book

Bruce Schneier presented the contents of his new book, which will be published in January. It’s about hacking, but not only about IT hacks. It covers cheating on systems and laws in general, such as the tax system or emissions regulation, and how AI could speed up finding such hacks. There is more information on his talk in this article.

Business Information Security Officers needed

Nicole Dove pointed out that BISOs (Business Information Security Officers) are on the rise and are needed to support business teams in implementing cybersecurity. They should move from a “NO” to a “YES, and” attitude to get better results. There is also a video with Nicole in which she talks about the role of the BISO.

Cybersecurity Workforce Gap

The cybersecurity workforce gap and how to address it was the topic of several presentations. In his keynote “Soulless to Soulful: Security’s Chance to Save Tech”, Bryan Palma proposed coming up with new ideas and collaborating across company borders to close the workforce gap. He proposed the new campaign “I do #soulfulwork” because for many employees it is important to do something good and valuable in their work, which is definitely the case in cybersecurity.

Disinformation

The closing keynote “The Hugh Thompson Show” started out very funny, only to move on to one of the most serious topics in today’s world: disinformation and how it threatens our democratic values. The panelists proposed several ideas on how to address it, but in the end they pointed out that education and awareness will be key to being able to challenge (fake) news and validate sources. They also recommended talking to each other in person and not only on social media.